From Context Engineering Back to Vibe Coding? The Evolving Future of AI Development

Hey, AI trailblazers! If you caught my last piece on Context Engineering vs. Vibe Coding, you know it struck a chord—pun intended, since we're talking vibes. That article unpacked the shift from casual, intuitive AI jams (vibe coding) to structured, reliable systems (context engineering). But what if the pendulum swings back? Could advanced AI make vibe coding viable again, reducing our reliance on meticulously engineered contexts? I've dug into the latest trends, discussions, and expert takes to explore this. Spoiler: We're not ditching context engineering anytime soon, but the future might blend the best of both worlds in ways that'll make development feel like pure magic. Let's vibe through it.

Recap: Vibe Coding vs. Context Engineering – The Core Vibes

For the uninitiated (or those needing a refresher), vibe coding is that exhilarating flow where you chat with AI in plain English ("Hey, build me a todo app with dark mode vibes") and it spits out code. It's AI-assisted prototyping at its most fun. Andrej Karpathy coined the term in early 2025 for this style, where natural language turns into functional software without sweating syntax. Think of it as jamming on a guitar: intuitive, creative, but prone to off-key notes if you're not careful.

Context engineering, on the flip side, is the grown-up version. It's about curating everything the AI sees: prompts, tools, retrieved data (as in RAG, retrieval-augmented generation), memory, and workflows. Instead of hoping the AI "gets the vibe," you engineer the environment so it's hard to miss, like giving a chef a stocked pantry, a recipe, and a timer. That's why enterprises are adopting it for production-grade AI agents: it reduces hallucinations and boosts reliability.
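To make "engineering the environment" a bit more concrete, here's a minimal Python sketch of a context builder. Everything in it is hypothetical: the `ContextPiece` type, the priority scheme, and the word count standing in for a real tokenizer are assumptions for illustration, not any framework's API. The idea is simply to pack the most important pieces (instructions, retrieved docs, memory) into a fixed budget before the request ever reaches the model.

```python
from dataclasses import dataclass


@dataclass
class ContextPiece:
    """One chunk of context (hypothetical type for this sketch)."""
    label: str      # e.g. "instructions", "retrieved doc", "memory"
    text: str
    priority: int   # lower number = more important


def approx_tokens(text: str) -> int:
    # Assumption: whitespace word count as a crude stand-in for a real tokenizer.
    return len(text.split())


def build_context(task: str, pieces: list[ContextPiece], budget: int) -> str:
    """Greedily pack the most important pieces into a token budget, then append the task."""
    used = approx_tokens(task)  # the task itself always goes in
    chosen: list[ContextPiece] = []
    # Walk pieces from most to least important; skip any that would overflow the budget.
    for piece in sorted(pieces, key=lambda p: p.priority):
        cost = approx_tokens(piece.text)
        if used + cost <= budget:
            chosen.append(piece)
            used += cost
    sections = [f"[{p.label}]\n{p.text}" for p in chosen]
    sections.append(f"[task]\n{task}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    pieces = [
        ContextPiece("instructions", "You are a careful TypeScript reviewer.", 0),
        ContextPiece("retrieved doc", "Our API returns dates as ISO 8601 strings.", 1),
        ContextPiece("memory", "User prefers functional components and dark mode.", 2),
    ]
    # budget=25: task (8 words) + instructions (6) + doc (8) = 22;
    # the memory piece (7) would push it to 29, so it gets dropped.
    print(build_context("Add a due-date field to the todo app.", pieces, budget=25))
```

The greedy, priority-ordered packing is the design choice here: when space runs out, the least important context is what gets cut, rather than whatever happened to come last.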

Right now, vibe coding shines for quick prototypes but flops in complex scenarios, while context engineering scales but feels less "vibey." But could smarter AI flip this script?

Why We Need Context Engineering Now: The Reality Check

In 2025, context engineering isn't optional; it's essential. LLMs are beasts at pattern-matching, but without the right context they hallucinate, forget, or just plain whiff it. Surveys and developer reports suggest that AI failures often stem from poor context rather than weak models. Vibe coding? It's great for solo hackers or non-technical folks brainstorming ideas, but in team settings or production it tends to produce "slop code": buggy, unmaintainable messes that need human fixes.

Take Coinbase: it's pushing for 50% AI-generated code, but that code is reviewed and contextualized, not pure vibes. On X, devs complain that vibe coding hits a wall with large codebases: the AI loses track, and you're back to manual tweaks. Context engineering addresses this by injecting tools (APIs, databases), memory (short- and long-term), and reasoning chains, making AI agents more autonomous and reliable. It's why you'll hear the slogan "prompt engineering is dead; context engineering reigns."
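Here's a minimal Python sketch of two of those ingredients: a tool registry the agent can call into, and a rolling short-term memory. The JSON tool-call shape (`{"name": ..., "arguments": ...}`), the `lookup_order` tool, and its fake data are all hypothetical; real frameworks and model APIs define their own formats.

```python
import json
from collections import deque
from typing import Callable

# Hypothetical registry: tool name -> Python function the agent may call.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_order(order_id: str) -> str:
    # Stand-in for a real database or API call (hypothetical data).
    fake_db = {"A17": "shipped", "B42": "processing"}
    return fake_db.get(order_id, "not found")


class ShortTermMemory:
    """Keeps only the last `size` turns, so old chatter falls out of context."""

    def __init__(self, size: int = 4) -> None:
        self.turns: deque = deque(maxlen=size)

    def add(self, turn: str) -> None:
        self.turns.append(turn)

    def render(self) -> str:
        return "\n".join(self.turns)


def dispatch(tool_call_json: str, memory: ShortTermMemory) -> str:
    """Run a tool call emitted by the model and record the result in memory.

    Assumed call format: {"name": "<tool>", "arguments": {...}}.
    """
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Report unknown tools back instead of crashing, so the model can recover.
        result = f"error: unknown tool {call['name']!r}"
    else:
        result = fn(**call.get("arguments", {}))
    memory.add(f"tool {call['name']} -> {result}")
    return result


if __name__ == "__main__":
    mem = ShortTermMemory(size=2)
    print(dispatch('{"name": "lookup_order", "arguments": {"order_id": "A17"}}', mem))
    print(mem.render())
```

The bounded `deque` is what makes this "short-term": once it's full, the oldest turn silently drops off. A long-term store would sit beside it and feed back in through retrieval instead.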

In short, today's AI needs hand-holding. Vibe coding feels freeing but often ends in frustration—like trying to direct a movie with no script.

Could Vibe Coding Make a Comeback? Peeking into the Crystal Ball

Here's the intriguing part: as models evolve, vibe coding might reclaim the throne. Some experts predict that with bigger context windows (some models already accept a million tokens or more), multimodal inputs (vibe with images and video), and self-improving agents, AI could "get" your intent without explicit engineering. Imagine describing an app in casual chat while the AI auto-fills context from your past projects, web searches, or even user behavior, turning vibes into polished products.
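How might an assistant "auto-fill context from your past projects"? One simple approach is retrieval: score stored project notes against the casual prompt and prepend the best matches. Below is a minimal Python sketch, assuming bag-of-words cosine similarity as a stand-in for the embedding models real RAG systems typically use; the note format and the function names are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a crude stand-in for learned embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, notes: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k past-project notes most similar to the query."""
    q = vectorize(query)
    ranked = sorted(notes, key=lambda name: cosine(q, vectorize(notes[name])), reverse=True)
    return ranked[:k]


def expand_vibe(prompt: str, notes: dict[str, str], k: int = 2) -> str:
    """Prepend the most relevant past-project notes to a casual prompt."""
    picked = retrieve(prompt, notes, k)
    context = "\n".join(f"- {name}: {notes[name]}" for name in picked)
    return f"Relevant past work:\n{context}\n\nRequest: {prompt}"


if __name__ == "__main__":
    notes = {
        "todo-app": "React todo app with dark mode and local storage",
        "blog": "Static blog built with markdown and a light theme",
        "dashboard": "Sales dashboard with charts and dark mode toggle",
    }
    print(expand_vibe("build me a todo app with dark mode vibes", notes))
```

For the sample prompt, the todo-app note shares the most words and ranks first, the dark-mode dashboard second, and the blog is left out. Swapping `vectorize` for a real embedding model would keep the same shape while matching on meaning rather than exact words.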

Some foresee "vibe designing" replacing coding: GUI-based tools where you sketch or talk and the AI handles the rest, no code visible. In robotics or Web3, vibe coding could power adaptive systems that evolve on the fly. X threads buzz about non-technical leaders prototyping via vibes, then handing off to engineers, hinting at a future where everyone "codes" intuitively.

But is this realistic? Some sources say yes, with caveats. Some point to theorem-proving models improving faster than code models, which could eventually make proof-as-code seamless. Yet many argue context engineering won't vanish; it'll be automated into invisible scaffolding for vibe-like flows. The consensus? Vibe coding could dominate ideation and small apps, but complex systems will always need some engineering under the hood.

Current Platforms: Tools for Vibes and Structured Mastery

Let's get practical. Here's where you can play today, split by style.

Vibe Coding Platforms and Editors

These make casual AI jamming a breeze:

- Cursor: AI-powered IDE for natural language edits—perfect for "vibe edits" on existing code.

- Replit: Cloud-based playground; start with a prompt, AI builds and runs it. Ideal for beginners or quick prototypes.

- GitHub Copilot: Inline completions plus a chat that turns requests into code; great for flow-state coding in VS Code.

- Claude Code (Anthropic): Agentic coding tool that runs in your terminal; describe your vision and iterate conversationally in real time.

- v0 by Vercel: Web-focused; vibe out UIs with prompts, deploys instantly.

- Bolt.new and Lovable: Niche tools for prompt-to-app; fun for non-devs experimenting.

These emphasize speed and intuition, but users warn: Review the code, or regret it later.

Context Engineering Platforms and Editors

For production muscle:

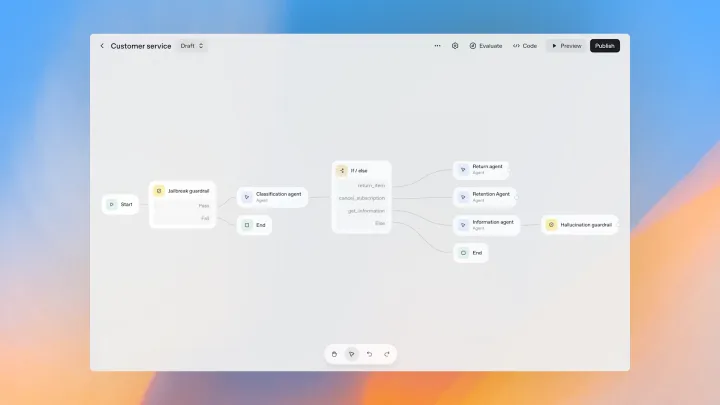

- LangChain/LangGraph: Frameworks for chaining prompts, tools, and memory—build agents that "think" step-by-step.

- LlamaIndex: Focuses on RAG and data ingestion; optimizes context from docs or DBs.

- Zep: Memory layer for agents that curates chat history and business data, helping keep responses personalized and accurate.

- PromptLayer: Tracks and optimizes prompts/contexts; great for debugging AI flows.

- DSPy: An underrated framework that "compiles" declarative LM programs, automatically tuning their prompts and few-shot examples.

These turn vibes into verifiable systems, often integrating with vibe tools for hybrid workflows.

How Platforms Might Evolve: Toward a Vibe-Coding Utopia

Fast-forward: as AI scales, platforms could merge vibes with automatic context engineering. Imagine Cursor or Replit inferring context from your codebase, user data, or even brainwaves (okay, that's sci-fi... for now). Tools like Azure AI's adaptive translation hint at where multimodal vibes could go: code via voice, sketches, or gestures.

Context platforms like LangChain might become "self-engineering," where you vibe a high-level goal, and it auto-builds the context pipeline. In crypto/Web3, vibe coding could spawn "vibe coins" tied to instant prototypes. But evolution won't kill context—it'll hide it, making development feel vibey while staying robust.

We're Not There Yet, But Approaching at Warp Speed

Truth bomb: Pure vibe coding for everything? Not today. Large codebases overwhelm AI, and security/ethics demand structure. X devs agree: Vibe for prototypes, context for production. But progress is blistering—models like o1 or future Groks could bridge the gap in months, not years. We're racing toward a world where vibes suffice, but for now, blend them: Vibe to start, engineer to finish.

Wrapping Up: Vibes, Structure, and the Road Ahead

Context engineering rules 2025 because AI needs guidance, but vibe coding's intuitive charm could make a comeback as the tech matures. Platforms are evolving to make this hybrid seamless, turning development into a creative jam session. We're not fully vibing yet, but the pace? Electric. Experiment with these tools and share your predictions below: what's your take on the vibe comeback?

Thanks for the read—now go vibe-engineer something epic! 🚀
