Google Gemini API Free Tier: An In-Depth Analysis of Models, Usage Limits, Capabilities, and How to Get the Most From Them (September 2025)

Introduction

Artificial Intelligence is no longer the preserve of well-funded enterprises—in 2025, powerful, multimodal AI APIs are available to anyone with a browser and a Google account. Google’s Gemini API is at the center of this democratization, offering developers and curious minds access to sophisticated reasoning, code, and content generation capabilities on a generous free tier. But with the proliferation of new models (like Gemini 2.5 Pro and Flash), updates to Imagen 3, and a maze of daily caps and usage limits, understanding how to maximize your value from Google’s free offerings has never been more critical.

This blog post provides a comprehensive, up-to-date, and opinionated breakdown of the Google Gemini API free tier as of September 2025. We’ll review all currently available models (including Gemini 2.5 Pro, Gemini 2.5 Flash, Flash-Lite, and Imagen 3), examine their usage limits, capabilities, and differences, and present a visual feature comparison to help you select the best fit for your real-world needs. We’ll also share practical tips for stretching your free usage, showcase use cases designed to inspire, and offer a candid verdict on who Google’s free tier is (and isn't) best suited for.

Google Gemini API Free Tier Overview

What Is the Gemini API Free Tier?

At its core, the Gemini API free tier is Google’s developer-focused entry point to next-generation AI models. It allows anyone to experiment with large language models, multimodal reasoning, and basic generative functionality at no cost—subject to daily and per-minute rate limits that are tuned for prototyping and limited production use.

Access to the free tier is straightforward: developers sign up in Google AI Studio, generate an API key (linked to a Google account), and can immediately make calls to the suite of Gemini models without entering billing information. This removes the typical financial barriers to exploring AI, making sophisticated tools available to students, hobbyists, small businesses, and solo developers.

Key Points:

- Generating and using an API key costs nothing. On the free tier, exceeding a limit returns a rate-limit error rather than a bill; you pay only if you enable billing and move to a paid tier.

- Core models include Gemini 2.5 Pro (for advanced use), Gemini 2.5 Flash (for speed and cost), Flash-Lite (for even lighter tasks), and select capabilities of previous generations.

- Multimodal support (text, images, audio, video) is native to most of the latest models.

- Free tier covers almost all developer onboarding and testing needs.

What’s Not Included:

- Higher-performance, paid-only models (like Ultra and future “Gemini 3.0”).

- Some advanced image/video generation and audio features (Imagen 3, Veo 3) are excluded or severely restricted in the free tier.

- The free tier carries lower quotas and lacks enterprise-level data privacy.

Free Tier Usage Limits — The Facts, Figures, and Fine Print

How Google Enforces Rate Limits

Rate limits on the Gemini API are defined across three main dimensions:

- Requests Per Minute (RPM): The number of API calls you can make in a minute.

- Tokens Per Minute (TPM): Total tokens processed per minute (input/output combined); 1 token is roughly 4 characters or 0.75 English words.

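- Requests Per Day (RPD): The number of requests a project can make in one quota day. Despite the name, this is not a rolling 24-hour window: the daily count resets at a fixed time (see the tip below).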
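To turn the token rule of thumb into numbers, here is a rough estimator. It is a sketch based only on the ~4 characters / ~0.75 words per token heuristic above; real tokenization varies by language and content, and for exact counts the API provides a token-counting method (`countTokens` in the REST API).

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough token count from character length (~4 characters per token)."""
    return math.ceil(len(text) / 4)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough token count from a word count (~0.75 English words per token)."""
    return math.ceil(word_count / 0.75)


# A 3,000-word article is roughly 4,000 tokens by the word-based rule.
print(estimate_tokens_from_words(3_000))
```

Rounding up keeps the estimate on the conservative side, which is what you want when budgeting against a TPM cap.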

When you exceed any of these caps, you’ll receive a “429 RESOURCE_EXHAUSTED” (rate limit) error. These limits apply per Google Cloud project, not per API key, so splitting usage across multiple keys within the same project does not circumvent quota.

Tip: Per-minute limits (RPM, TPM) are evaluated minute by minute, while daily quotas (RPD) reset at midnight Pacific Time. Batch jobs, non-standard endpoints (like the Live API), and experimental models may have separate caps.
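Because limits are enforced per project, it can help to throttle on the client side before Google does. The sketch below is one way to do that for RPM and RPD. It assumes a sliding 60-second window (at least as strict as a per-minute window) and uses the midnight-Pacific daily reset described above. `ClientSideLimiter` and its parameters are illustrative names, not part of any Google SDK.

```python
import time
from collections import deque
from datetime import datetime


def pacific_day(ts: float) -> str:
    """Quota-day label: the calendar date in US Pacific Time."""
    from zoneinfo import ZoneInfo  # needs the OS time-zone database or the tzdata package
    return datetime.fromtimestamp(ts, ZoneInfo("America/Los_Angeles")).date().isoformat()


class ClientSideLimiter:
    """Keep one project's traffic under its RPM and RPD caps before Google enforces them."""

    def __init__(self, rpm: int, rpd: int, clock=time.time, day_fn=pacific_day):
        self.rpm, self.rpd = rpm, rpd
        self.clock, self.day_fn = clock, day_fn
        self.recent = deque()  # timestamps of requests made in the last 60 seconds
        self.day = None
        self.day_count = 0

    def try_acquire(self) -> bool:
        """Record a request and return True if it fits both caps; otherwise return False."""
        now = self.clock()
        today = self.day_fn(now)
        if today != self.day:  # a new quota day started: reset the daily counter
            self.day, self.day_count = today, 0
        while self.recent and now - self.recent[0] >= 60:  # slide the 60-second window
            self.recent.popleft()
        if len(self.recent) >= self.rpm or self.day_count >= self.rpd:
            return False
        self.recent.append(now)
        self.day_count += 1
        return True
```

For Gemini 2.5 Pro on the free tier, `ClientSideLimiter(rpm=5, rpd=100)` would match the figures in the usage table below; call `try_acquire()` before each request and wait or queue the request whenever it returns `False`.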

Free Tier Usage Table (September 2025)

The following table summarizes the main free tier limits for the leading Gemini API models as reported in both official documentation and industry sources as of September 2025:

| Model / Feature | Requests Per Minute (RPM) | Tokens Per Minute (TPM) | Requests Per Day (RPD) | Context Window | Free API Access | Notes / Modalities Supported |
|---|---|---|---|---|---|---|
| Gemini 2.5 Pro | 5 | 250,000 | 100 | 1M tokens | Yes | Text, image, audio, video inputs |
| Gemini 2.5 Flash | 10 | 250,000 | 250 | 1M tokens | Yes | Text, image, audio, video (multimodal) |
| Gemini 2.5 Flash-Lite | 15 | 250,000 | 1,000 | 1M tokens | Yes | For high-throughput, low-cost tasks |
| Gemini 2.0 Flash | 15 | 1,000,000 | 200 | 1M tokens | Yes | |
| Gemini 2.5 Flash Live | 3 sessions | 1,000,000 | * | * | Yes | Multimodal, low-latency voice and video |
| Gemini 2.5 Flash Preview TTS | 3 | 10,000 | 15 | * | Yes (preview) | Text-to-speech; output: audio |
| Imagen 3 | — | — | — | — | No | Paid tier only |
| Gemini Embedding | 100 | 30,000 | 1,000 | * | Yes | Text embedding model |
| Gemma 3 / 3n | 30 | 15,000 | 14,400 | * | Yes | Open-weight LLMs for lighter tasks |

Limits vary for preview/experimental models. An asterisk (*) indicates a value that is not officially published for that dimension or not universally available.
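A little arithmetic on these figures shows which cap binds first. The sketch below encodes four rows of the table (keyed by the model IDs used in API calls) and computes the effective requests per minute once TPM is taken into account, plus how long a project could run at full speed before hitting its RPD cap. The average request size is an input you would estimate yourself.

```python
# (RPM, TPM, RPD) free-tier figures from the table above, September 2025.
FREE_TIER = {
    "gemini-2.5-pro": (5, 250_000, 100),
    "gemini-2.5-flash": (10, 250_000, 250),
    "gemini-2.5-flash-lite": (15, 250_000, 1_000),
    "gemini-2.0-flash": (15, 1_000_000, 200),
}


def effective_rpm(model: str, avg_tokens: int) -> int:
    """Requests per minute allowed once both the RPM and TPM caps are respected."""
    rpm, tpm, _ = FREE_TIER[model]
    return min(rpm, tpm // avg_tokens)


def minutes_until_daily_cap(model: str, avg_tokens: int) -> float:
    """Minutes of full-speed use before the daily request cap (RPD) is reached."""
    _, _, rpd = FREE_TIER[model]
    per_minute = effective_rpm(model, avg_tokens)
    if per_minute == 0:
        raise ValueError("average request exceeds the per-minute token cap")
    return rpd / per_minute


for model in FREE_TIER:
    print(model, effective_rpm(model, 2_000), round(minutes_until_daily_cap(model, 2_000), 1))
```

With 2,000-token requests, Gemini 2.5 Pro's 100 daily requests are gone after about 20 minutes at 5 RPM, so on the free tier the daily cap, not the per-minute cap, is usually the real constraint. Very large prompts flip this: at 100,000 tokens each, TPM limits Pro to 2 requests per minute.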

Additional Contextual Free Tier Caps

- Image Generation (Imagen 3 / 4): Not included in the API free tier. Image generation is available in the consumer Gemini interface, but Imagen 3 and Imagen 4 are accessible through the API only on paid tiers.

- Deep Research: 5 reports/month (free), increases to 20/day (Pro), 200/day (Ultra).

- Audio Overviews: 20 per day, on all tiers.

- Context Window: In Gemini Apps, free users get ~32k tokens, while Google AI Pro/Ultra subscribers get 1M (or more). Through the API, the Flash models support a 1M-token context window even on the free tier.

- File Upload Limits: Up to 10 files per prompt, individual max 100MB (text/docs), videos up to 2GB/5 min for free users.
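Checking uploads locally before sending them avoids wasting a request on a file that will be rejected anyway. This sketch hard-codes the free-user figures from the list above (10 files per prompt, 100 MB per document, 2 GB and 5 minutes per video); those numbers, and the choice to read "MB" and "GB" as binary units, are assumptions to verify against current documentation. `Upload` and `preflight` are hypothetical names.

```python
from dataclasses import dataclass

MB = 1024 * 1024  # assumption: binary megabytes
MAX_FILES_PER_PROMPT = 10
MAX_FILE_BYTES = 100 * MB        # text/document files
MAX_VIDEO_BYTES = 2 * 1024 * MB  # 2 GB
MAX_VIDEO_SECONDS = 5 * 60       # 5 minutes


@dataclass
class Upload:
    name: str
    size_bytes: int
    is_video: bool = False
    duration_s: float = 0.0


def preflight(files: list[Upload]) -> list[str]:
    """Return human-readable problems; an empty list means the batch looks acceptable."""
    problems = []
    if len(files) > MAX_FILES_PER_PROMPT:
        problems.append(f"{len(files)} files exceeds the {MAX_FILES_PER_PROMPT}-file limit")
    for f in files:
        limit = MAX_VIDEO_BYTES if f.is_video else MAX_FILE_BYTES
        if f.size_bytes > limit:
            problems.append(f"{f.name}: {f.size_bytes} bytes exceeds {limit}")
        if f.is_video and f.duration_s > MAX_VIDEO_SECONDS:
            problems.append(f"{f.name}: {f.duration_s:.0f}s exceeds {MAX_VIDEO_SECONDS}s")
    return problems
```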

Rate Limit Enforcement Mechanisms

Google’s Gemini API uses a combination of static quotas (numeric limits imposed per tier) and dynamic throttling during periods of high load or suspected abuse. If you exceed RPM, TPM, or RPD, further requests are rejected until that window resets. Throttling can occasionally kick in even below the published limits when backend load is high, especially on the free tier.

Projects that reach the cap can:

- Upgrade to a paid tier (immediately expands available capacity).

- Request a quota increase (usually available only on paid billing accounts for API usage).

- Wait for reset (minute or daily, depending on which cap was reached).

Gemini Models on the Free Tier: Capabilities and Key Differences

Let’s break down the most relevant models and their unique strengths, weaknesses, and intended purposes. (See feature comparison table in the next section for a visual reference.)

Gemini 2.5 Pro

Overview:

Gemini 2.5 Pro is Google’s flagship “thinking” model—designed for maximum intelligence, deep structured reasoning, and multimodal understanding. This model excels at coding, data analysis, and tasks that require long chains of logic, combining both advanced language reasoning and cross-modal correlation (text + images/videos/audio).

Key Capabilities:

- Multimodal—accepts text, images, video, audio, and even complex tabular data.

- Context window: Up to 1 million tokens for API usage (with 32k limit enforced in free Gemini Apps).

- Advanced code generation, plus a code execution tool that lets the model write and run Python in a sandbox.

- “Deep Think” mode (ultra-long reasoning path, available to paid Ultra users), supporting creative and research-style multi-step outputs.

- API supports function calling, structured output (JSON), and tools.
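Function calling works by declaring functions with an OpenAPI-style parameter schema. When the model decides to use one, it returns a `functionCall` part (a name plus `args`), and your code runs the function and sends back a `functionResponse` part. The sketch below shows only the local half of that loop, using plain dictionaries shaped like the REST API's parts; `get_weather` is a hypothetical example function, and the network request itself is omitted.

```python
# Declaration you would include in the request's tools list.
GET_WEATHER = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    """Hypothetical local implementation; swap in a real weather source."""
    return {"city": city, "temp_c": 21}


# Map each declared function name to its declaration and Python handler.
REGISTRY = {"get_weather": (GET_WEATHER, get_weather)}


def dispatch(function_call: dict) -> dict:
    """Run the function the model asked for and wrap the result as a functionResponse part."""
    name = function_call["name"]
    if name not in REGISTRY:
        raise KeyError(f"model requested unknown function {name!r}")
    declaration, handler = REGISTRY[name]
    args = function_call.get("args", {})
    missing = [p for p in declaration["parameters"].get("required", []) if p not in args]
    if missing:
        raise ValueError(f"{name} called without required args: {missing}")
    return {"functionResponse": {"name": name, "response": handler(**args)}}


print(dispatch({"name": "get_weather", "args": {"city": "Lisbon"}}))
```

Validating required arguments locally catches malformed calls before they reach your own systems, which matters more on the free tier, where a wasted retry costs scarce quota.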

Ideal For:

- Legal and medical analysis with long files.

- Software development: not just code suggestion but debugging and refactoring.

- Academic research, complex math, and scientific problem solving.

- Data-rich environments (CSV, PDFs, media assets).

Limitations in the Free Tier:

- Severely capped (5 requests/day on Apps; 100/day via API).

- Slower per-response latency (due to deeper internal reasoning and safety checks).

- No access to some paid-exclusive features (e.g., code execution in production).

Gemini 2.5 Flash

Overview:

While Pro is the cerebral heavyweight, Gemini 2.5 Flash is the “workhorse” model, built for speed, low cost, and massive throughput with good (but not top-tier) reasoning. It lets developers and businesses deploy AI in rapid, interactive, or high-volume scenarios: think chatbots, real-time captioning, or mobile summarization.

Key Capabilities:

- Multimodal inputs: Text, audio, video, images, and PDFs.

- 1 million token context window (API).

- Blazing fast output; low latency.

- Hybrid reasoning: a “thinking budget” lets you cap how many tokens the model spends on internal reasoning for each request, which makes it easy to balance answer quality against latency and cost.

- Built-in tool integration (for example, Google Search grounding and code execution).

- Function calling and structured output for workflows.
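To make the thinking budget concrete, the helper below builds the `thinkingConfig` fragment of a REST `generationConfig`, mapping a few effort levels to token budgets and clamping them to each model's allowed range. The effort names and their token values are hypothetical choices. The ranges (0 to 24,576 for 2.5 Flash, where 0 turns thinking off; 128 to 32,768 for 2.5 Pro, which cannot disable thinking) reflect the documentation at the time of writing and may change.

```python
# Allowed thinkingBudget ranges (assumption: per documentation at the time of writing).
BUDGET_RANGE = {
    "gemini-2.5-flash": (0, 24_576),  # 0 disables thinking
    "gemini-2.5-pro": (128, 32_768),  # thinking cannot be switched off
}

# Hypothetical effort levels mapped to token budgets.
EFFORT_TOKENS = {"off": 0, "low": 1_024, "medium": 8_192, "high": 24_576}


def thinking_config(model: str, effort: str) -> dict:
    """Return a generationConfig fragment with a budget clamped to the model's range."""
    low, high = BUDGET_RANGE[model]
    budget = min(max(EFFORT_TOKENS[effort], low), high)
    return {"generationConfig": {"thinkingConfig": {"thinkingBudget": budget}}}


print(thinking_config("gemini-2.5-flash", "off"))
print(thinking_config("gemini-2.5-pro", "off"))
```

For a routine FAQ answer on Flash, `effort="off"` saves tokens and latency; the same setting on Pro still yields the 128-token minimum, because Pro always thinks.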

Ideal For:

- High-frequency customer chat, support bots, virtual assistants.

- Summarization of emails, news, or meeting transcripts.

- Captioning media; real-time transcriptions with context.

- Lightweight code generation; rapid prototyping.

Limitations in the Free Tier:

- Lower daily and per-minute limits than paid tiers.

- No “Deep Think” mode. Massive inputs may also need chunking: a single prompt near the 1M-token context limit would exceed the free tier’s 250,000 TPM cap.

Gemini 2.5 Flash-Lite

Overview:

This model is the ultra-budget version of Flash: optimized for maximum throughput at lowest cost, sacrificing some reasoning (especially in “non-thinking” mode) for scale and API response speed.

Best For:

- Streamlined data extraction, light summarization, mass reporting.

- Use cases where quality can be traded for throughput (lead scoring, auto-tagging, etc.).

- Educational apps, code review hints, or high-volume checklist generation.

Limitations in the Free Tier:

- Daily limit is higher than regular Flash, but lower than paid.

- Restricted tool integration and output customization.

Imagen 3

Overview:

Imagen 3 is Google’s top text-to-image model, able to generate photorealistic visuals and complex compositions from natural language prompts. It features strong prompt adherence and high-quality text rendering in images. This makes it suitable for marketing creatives, prototyping, and content generation.

Access and Free Tier Status:

- Not available for free tier use via Gemini API as of Sep 2025.

- Some free image generation may be possible via consumer-facing Gemini Apps, but API access for developers and production use requires a paid tier.

- For those with access, prompt quality, detailed descriptions, and style modifiers are vital for best results.

Older and Specialized Models

- Gemini 2.0 Flash / Flash-Lite: Still available for backward-compatible integrations, with higher per-minute limits than 2.5 Flash (though 2.0 Flash has a lower daily cap) and lower cost.

- Gemma 3 / 3n: Lightweight, open-weight models ideal for mobile and low-latency applications—excellent for fast, low-stakes inference.

Google Gemini API Free Tier: Feature Comparison Table

| Feature / Model | Gemini 2.5 Pro | Gemini 2.5 Flash | Gemini 2.5 Flash-Lite | Gemini 2.0 Flash | Gemma 3/3n | Imagen 3 (API) |
|---|---|---|---|---|---|---|
| Input / Modality | Text, audio, image, video, PDF | Text, audio, image, video, PDF | Text, image, video, audio | Text, audio, image, video | Text | Text-to-image (not free) |
| Output / Modality | Text | Text | Text | Text | Text | Image |
| Context Window (API) | 1M tokens | 1M tokens | 1M tokens | 1M tokens | 128K/32K | — |
| Context Window (Gemini Apps) | ~32K tokens | ~32K tokens | ~32K tokens | ~32K tokens | — | — |
| Maximum Output Tokens | 65K | 65K | 65K | 8K | — | — |
| Requests Per Minute (RPM) | 5 | 10 | 15 | 15 | 30 | — |
| Requests Per Day (RPD) | 100 (API); 5 (Apps) | 250 (API); — (Apps) | 1,000 (API) | 200 (API) | 14,400 (API) | 0 (not free) |
| Multimodal Support | Yes | Yes | Yes | Yes | No | Yes |
| Code Execution | Yes | Light, limited | No | Light | No | No |
| Reasoning Type | Advanced | Hybrid, budgeted | Basic, cost-optimized | Basic | Basic | N/A |
| Audio Output | No (API); Yes (TTS/Native paid) | Yes (limited) | No | Yes (limited) | No | No |
| Function Calling / Tools | Yes (full) | Yes (core) | Yes | Yes | No | No |
| Free Image Generation | Limited (in Apps) | Limited (in Apps) | Limited (in Apps) | Limited (in Apps) | No | No |
| API Free Tier Access | Yes | Yes | Yes | Yes | Yes | No |
| Price per 1M Tokens (Paid Tier) | $1.25 (input), $10 (output) for prompts ≤200K tokens | $0.30 (input), $2.50 (output) | $0.10 (input), $0.40 (output) | $0.10/$0.40 | — | $0.03/image |
| Main Strength | Deep reasoning, code | Fast, cheap, balanced | High throughput | Real-time tasks | Lightweight | Photo-realism, style diversity |
| Updated As Of | September 2025 | September 2025 | September 2025 | September 2025 | September 2025 | September 2025 |

Table sourced and synthesized from primary Gemini documentation, AI Studio, and multiple recent reviews and guides for developers.

Visual Model Comparison and Analysis

Gemini 2.5 Pro represents Google’s most advanced “thinking” model in the free tier. It is optimized for accuracy over speed, supporting highly sophisticated reasoning, extended context, and native code execution. Its benchmark results (e.g., 88% on AIME 2025, 18.8% on Humanity's Last Exam) place it at or near the top of industry leaderboards, ahead of prior-generation LLMs, GPT-4, and even some competing paid models on complex academic and technical tasks.

Gemini 2.5 Flash gives up some reasoning prowess for a significant gain in output speed and cost efficiency, making it ideal as an embedded AI for summarization, live chat, and mobile use. Its hybrid reasoning mode allows granular control over how much cognitive effort is spent per answer: set a “thinking budget” for complex cases, or crank up the throughput with “non-thinking” for lightweight interactions.

Gemini 2.5 Flash-Lite is engineered for maximal throughput at almost negligible marginal cost, but cannot handle deep reasoning or complex code execution. Its main use is for workflows where the scale of inputs trumps the need for nuanced analysis.

Imagen 3 stands apart as one of the world's highest-performance text-to-image models, capable of generating visually stunning, high-resolution outputs with strong prompt adherence, high-quality text rendering in images, and support for a wide stylistic palette. However, as noted, it is paywalled for API developers and only lightly available for free in some front-end apps.

Real-World Use Cases for the Gemini Free Tier

Developer and Startup Scenarios

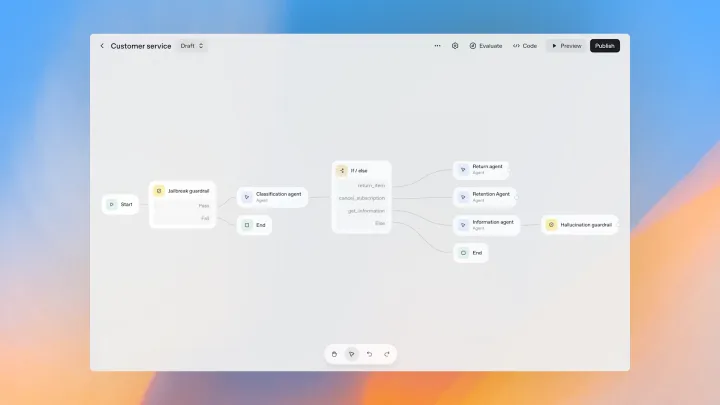

Rapid Prototype for Customer Support

A SaaS startup wants to build a customer support agent that can quickly escalate complex refund requests to deeper reasoning. The chatbot runs on Gemini 2.5 Flash for all routine queries (thanks to its speed and cost efficiency) and reserves its small daily Gemini 2.5 Pro allowance for the rare, nuanced cases that require deep reasoning or legal compliance, essentially “stretching” the free limit for maximum value.

Resume Extraction Tool

A developer creates a lightweight tool that parses uploaded PDF resumes. Gemini 2.5 Flash-Lite is used to extract job titles and dates for high throughput, while Pro is only used on “borderline” resumes that seem inconsistent, to conserve quota.
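Both scenarios follow the same pattern: send routine work to a cheaper model and escalate only the hard cases, while keeping track of how much Pro quota is left. A minimal sketch, assuming your application already labels each task (the labels `"bulk"`, `"routine"`, and `"hard"` are hypothetical) and defaulting to the 100-requests-per-day Pro figure from the usage table:

```python
class ModelRouter:
    """Route tasks to the cheapest adequate model and ration Gemini 2.5 Pro calls."""

    def __init__(self, pro_daily_limit: int = 100):
        self.pro_daily_limit = pro_daily_limit
        self.pro_used = 0

    def pick(self, label: str) -> str:
        if label == "bulk":  # extraction, tagging, other high-volume work
            return "gemini-2.5-flash-lite"
        if label == "hard" and self.pro_used < self.pro_daily_limit:
            self.pro_used += 1
            return "gemini-2.5-pro"
        # Routine work, or hard work after the Pro allowance is spent, falls back to Flash.
        return "gemini-2.5-flash"

    def reset_day(self) -> None:
        """Call when the daily quota resets (midnight Pacific Time)."""
        self.pro_used = 0


router = ModelRouter(pro_daily_limit=2)
print([router.pick(label) for label in ["routine", "hard", "bulk", "hard", "hard"]])
```

Falling back to Flash rather than failing keeps the product usable after the Pro allowance runs out; an application that needs Pro-quality answers could queue those tasks for the next quota day instead.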

Student and Academic Projects

Summarizing Long-Form Research

Students utilize Flash’s 1 million token context window for summarizing research articles, grant proposals, or full ebooks. They hit the daily message cap only in the final hours before submission, learning to break massive files into sections and intelligently splitting prompts to respect the quota.

Math Competition Practice

A math coach uses the free tier to generate Olympiad-level problems, switching between models. Pro creates bespoke, reasoning-heavy math questions (with explanations), while Flash produces hundreds of “quick practice” questions daily at a fraction of the cost.

Content Creator and Small Business Use

SEO Content Sprints

A freelance marketer relies on the AI Studio free tier to generate blog outlines and metadata using Flash, reserving Pro for the pillar article deep dives. Image generation via Apps (when available) supplements posts with unique visuals.

Podcast Highlight Tool

Podcast producers use Audio Overviews (limited to 20 per day) to automatically summarize episode key points, making it fast and free to repurpose content for newsletters.

Tips and Hacks to Maximize Your Free Tier Usage

1. Choose Models Strategically:

Don’t use Gemini 2.5 Pro for everyday small talk or for summarizing a single paragraph. Use Flash or, when speed matters most, Flash-Lite (2.5 or 2.0). Only “escalate” to Pro for heavyweight logic, document analysis, or multi-step coding challenges.

2. Chunk Input to Respect Context Limits:

If your document exceeds 32k tokens (in Apps), chunk it into smaller sections and synthesize results from each chunk. For the API, use the 1 million token context window whenever possible; it is a real advantage over, for example, GPT-4o (128k max). On the free tier, though, each request must also fit under the 250,000 TPM cap, so very large documents still need to be split.
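A simple way to chunk is to pack whole paragraphs greedily until a token budget is reached, splitting only paragraphs that are too large on their own. The sketch below reuses the rough ~4 characters per token estimate rather than a real tokenizer, so leave headroom below the actual limit (for example, aim for ~30k tokens per chunk against a 32k window). Each chunk can then be summarized separately and the partial summaries combined in a final request.

```python
CHARS_PER_TOKEN = 4  # rough heuristic, not an exact tokenizer


def chunk_paragraphs(text: str, max_tokens: int) -> list[str]:
    """Greedily pack paragraphs into chunks of at most ~max_tokens tokens."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
            continue
        if current:
            chunks.append(current)
        # A single paragraph larger than the budget is hard-split by characters.
        while len(para) > max_chars:
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        current = para
    if current:
        chunks.append(current)
    return chunks
```

Splitting on blank lines keeps most chunks aligned with the document's own structure, which tends to make per-chunk summaries easier to stitch together than arbitrary character cuts.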

3. Use Caching and Batch Requests:

Batch mode lets you send up to 100 jobs concurrently. Cache responses to popular or semi-static requests (e.g., “summarize our FAQ”) so you don’t repeat API calls.
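Client-side caching can be as simple as keying responses on the model name and the exact prompt text. This sketch keeps results in memory with a time-to-live; `call_model` stands in for whatever function actually sends the request (hypothetical here), and a production version would likely use a shared store instead of a per-process dictionary.

```python
import hashlib
import time


class ResponseCache:
    """In-memory cache so repeated (model, prompt) pairs don't spend quota twice."""

    def __init__(self, ttl_seconds: float = 3600, clock=time.time):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (stored_at, response)

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str, call_model):
        """Return a fresh cached response, or call call_model(model, prompt) and store it."""
        key = self._key(model, prompt)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        response = call_model(model, prompt)
        self._store[key] = (now, response)
        return response
```

Including the model name in the key matters: the same prompt sent to Flash and to Pro should not share a cached answer.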

4. Implement Backoff Strategies for Rate-Limit Errors:

Automate exponential backoff (retry after 1, 3, 7, etc., seconds, up to 5 times) to gracefully handle quota errors and minimize failed calls.
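A minimal sketch of that retry schedule: waits of 1, 3, 7, 15, and 31 seconds (that is, 2^(n+1) - 1 for retry n), plus a little random jitter, an addition so that parallel workers don't retry in lockstep. `RateLimitError` is a placeholder for whatever exception your HTTP client or SDK raises on a 429 `RESOURCE_EXHAUSTED` response.

```python
import random
import time


class RateLimitError(Exception):
    """Placeholder for the exception your client raises on HTTP 429 RESOURCE_EXHAUSTED."""


def call_with_backoff(fn, max_retries: int = 5, base: float = 1.0, jitter: float = 0.5, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with waits of 1, 3, 7, 15, 31 s (times base) plus jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = base * (2 ** (attempt + 1) - 1)
            sleep(delay + random.uniform(0, jitter))
```

Note that backoff only helps with per-minute limits; if the daily cap is exhausted, every retry until midnight Pacific Time will fail, so it is worth distinguishing the two cases (for example, with a client-side request counter) before retrying.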

5. Pre-Generate Outputs and Use at Off-Peak Times:

For content that isn’t real-time (such as lesson plans or articles), generate items during low-traffic hours—you’ll often see fewer “capacity reached” errors.

6. Monitor Usage Closely:

There is currently no built-in quota tracking for end-users, so track your consumption (via logs or manual tallies). In-production applications should surface usage to admins to avoid surprises.
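One lightweight way to do that tracking is to log every call with its token count. Responses report token usage (in the REST API, `usageMetadata.totalTokenCount`), which you can feed into a tally like the one below. The class, the 80% warning threshold, and the per-model daily limits you pass in are illustrative choices, and the caller supplies the quota-day label (for example, the current date in Pacific Time).

```python
from collections import defaultdict


class UsageLog:
    """Tally requests and tokens per (quota day, model) and warn as a daily cap nears."""

    def __init__(self, daily_request_limits: dict, warn_ratio: float = 0.8):
        self.limits = daily_request_limits
        self.warn_ratio = warn_ratio
        self.requests = defaultdict(int)  # (day, model) -> requests made
        self.tokens = defaultdict(int)    # (day, model) -> tokens consumed

    def record(self, day: str, model: str, total_tokens: int):
        """Log one call; return a warning string once usage reaches warn_ratio of the cap."""
        key = (day, model)
        self.requests[key] += 1
        self.tokens[key] += total_tokens
        limit = self.limits.get(model)
        if limit and self.requests[key] >= self.warn_ratio * limit:
            return f"{model}: {self.requests[key]}/{limit} requests used on {day}"
        return None
```

Surfacing the returned warning in an admin dashboard or log alert gives the early notice that the API itself does not.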

7. Use Appropriate Model Variants:

If Gemma 3/3n suits your need (basic summary, lightweight API call), use it—it’s free and less encumbered by rate limits.

Regional Eligibility, Access Restrictions, and Privacy

Who Can Access the Free Tier?

As of September 2025, Gemini API and Google AI Studio are available in over 180 countries and territories, covering nearly all of North America, Europe, parts of Asia, Africa, and Latin America. However, access is geo-blocked in certain regions due to export controls or regulatory requirements.

- Age requirement: 18 years+ for Google AI Studio and API.

- Certain content/feature restrictions apply depending on local laws (e.g., image generation is disabled in some jurisdictions).

Data Privacy and Use:

Data submitted via the free tier may be used by Google to improve models and products; businesses or researchers needing strict confidentiality should use the paid Gemini API tier or Vertex AI, or negotiate custom contracts.

The Latest Updates and Future Models (Sep 2025)

The Gemini family is on the cusp of further evolution. Gemini 3.0 Pro and Flash are reportedly referenced in internal documentation and code leaks, with expectations of:

- Larger context windows (up to 2 million tokens),

- Real-time 60 FPS video support,

- Native 3D object/geospatial processing,

- And reasoning modes with automatic adaptive switching.

Currently, the free tier only includes 2.5 Pro and Flash as the most advanced models, but Google frequently rotates in preview models for developer testing and early feedback (with lower quotas and less stability).

Veo 3 (video generation) and Imagen 4 (superior high-res image gen) remain paid features.

Real-World Scenarios: Impact and Practical Limitations

- Marketers: The 5-prompts-per-day limit on Gemini 2.5 Pro in the free Gemini app means that marketers must plan content “sprints” or move to a paid plan when high-volume generation is critical.

- Researchers/Students: The ~32k context window for free Gemini app users is excellent for essays and reports but insufficient for multi-chapter textbooks, so chunking is required.

- Developers: Programmatic/automated use is best for prototyping, with easy upgrading to paid tiers for commercial launch.

- Artists and Designers: Imagen 3/4’s paywall is a notable barrier for API-driven creative workflows on the free tier, though Apps-based generation may suffice for simple visuals.

Opinionated Verdict: Who Should Use the Gemini API Free Tier?

Best For:

- Students, tinkerers, and indie devs wanting to learn and prototype AI features with no cost or red tape.

- Small businesses and marketers with occasional, bursty needs for code, copy, or image prototypes.

- Researchers looking to quickly summarize, reason about, or dissect long texts and datasets.

Not Best For:

- Large enterprises: data privacy in the free tier is insufficient, and quotas are too restrictive for production.

- Professional creatives: limited access to advanced image/video models; paid plans needed for polished content workflows.

- Ultra-heavy users: scaling will require paid API use or negotiated enterprise contracts.

If you value flexibility, price transparency, and cutting-edge multimodal AI, and you're willing to strategize around limits, the free tier is the best playground in the AI landscape today. But for mission-critical, confidential, or high-output tasks, expect to upgrade sooner rather than later.

Final Thoughts

In a fast-moving AI ecosystem, Google’s Gemini API free tier remains a gold standard for open developer access. Its combination of model diversity, best-in-class multimodality, and advanced reasoning ability, albeit within reasonable limits, empowers a generation of builders and experimenters. The key is to know exactly what you’re getting, work within the boundaries, and be ready to upgrade as your ambitions (or audience) grow.

Start building. Stretch your quotas. Experiment with Gemini’s models and make the most of this unparalleled, low-barrier entry into the future of AI.
